Face Detection & Counting

- Nov 13, 2016

Once we have the flattened image (or even the unflattened one), the next step is face detection. How should this be done? One option is to scan the image pixel by pixel and match groups of pixels against a predefined pattern. Writing such an algorithm ourselves would be reinventing the wheel: early image-processing work experimented with exactly this approach, and far more robust and efficient face detection algorithms are available today.

A widely used approach to face detection is the Haar cascade classifier, and it is the one we use. The basic idea is that the classifier examines subsections of the image and, within each one, compares the pixel intensities of adjacent rectangular regions. There is a lot more that goes on behind the scenes, but we do not cover it in depth in this report, as we have made use of openly available XML files containing the Haar classifier information for face detection.
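To make the rectangle comparison concrete, here is a minimal sketch of a single two-rectangle Haar-like feature computed with a summed-area table (integral image). It is an illustration only, not the code inside OpenCV; the function names and the 4x4 test patch are made up for this example.

```python
def integral_image(img):
    """Return a summed-area table with one extra row and column of zeros."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the pixels in the rectangle with top-left (x, y), using four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_feature(ii, x, y, w, h):
    """Lower half minus upper half of a w x h window (h is assumed to be even)."""
    half = h // 2
    upper = rect_sum(ii, x, y, w, half)
    lower = rect_sum(ii, x, y + half, w, half)
    return lower - upper


# Illustrative 4x4 patch: a dark band (like the eye region) above a bright band.
patch = [
    [10, 10, 10, 10],
    [10, 10, 10, 10],
    [200, 200, 200, 200],
    [200, 200, 200, 200],
]
ii = integral_image(patch)
feature = two_rect_feature(ii, 0, 0, 4, 4)
```

A large positive value means the lower rectangle is much brighter than the upper one. A trained cascade combines many such features, each with its own threshold, which is the information stored in the XML files.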

We have written the face detection code in Python 2.7 with OpenCV. It first detects a candidate face and then detects the eyes and mouth within it for verification. If one were to cover their eyes or mouth, obstructing the edges and lines that make up the facial features the algorithm searches for, the face would fail verification and would not be detected.
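The detect-then-verify idea could look roughly like the sketch below. This is not our actual script: the function names, the "eyes in the upper half, mouth in the lower half" rule, and the `scaleFactor` and `minNeighbors` values are assumptions chosen for illustration, and the three XML paths are placeholders for whichever cascade files are used.

```python
def features_confirm_face(face_h, eyes, mouths):
    """Return True if an eye lies in the upper half of the face box and a
    mouth lies in the lower half.

    eyes and mouths are (x, y, w, h) rectangles relative to the face box.
    """
    mid = face_h / 2.0
    eye_ok = any(ey + eh / 2.0 < mid for (_, ey, _, eh) in eyes)
    mouth_ok = any(my + mh / 2.0 > mid for (_, my, _, mh) in mouths)
    return eye_ok and mouth_ok


def detect_verified_faces(gray, face_xml, eye_xml, mouth_xml):
    """Detect faces in a grayscale image and keep those with eyes and a mouth.

    The *_xml arguments are paths to Haar cascade XML files (placeholders).
    """
    import cv2  # imported here so the helper above works without OpenCV

    face_cascade = cv2.CascadeClassifier(face_xml)
    eye_cascade = cv2.CascadeClassifier(eye_xml)
    mouth_cascade = cv2.CascadeClassifier(mouth_xml)

    verified = []
    faces = face_cascade.detectMultiScale(gray, scaleFactor=1.1, minNeighbors=5)
    for (x, y, w, h) in faces:
        roi = gray[y:y + h, x:x + w]  # search for features inside the face only
        eyes = eye_cascade.detectMultiScale(roi)
        mouths = mouth_cascade.detectMultiScale(roi)
        if features_confirm_face(h, eyes, mouths):
            verified.append((x, y, w, h))
    return verified
```

Restricting the eye and mouth search to the face region keeps the extra cascades cheap, and it is also why a covered eye or mouth causes the whole face to be rejected.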

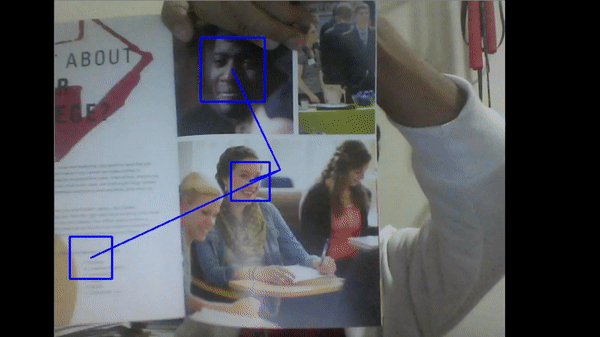

Although the image received from the SJ4000 is distorted, the face detection algorithm was able to detect faces on a magazine that we held up close to the lens. However, as we moved the magazine around and changed its orientation, detection became rather erratic. We will run detailed tests, gather data on its efficiency, and compare it with a flat lens. At the time of writing this draft report, we have not yet carried out these tests.

We applied the same face detection code to the laptop webcam and observed the results. Note that in the figure above there is a square at the bottom left marking a detected face, but it disappears after a few milliseconds.

A problem with this algorithm is that when certain factors align (brightness, contrast and motion blur, to name a few), a face is “detected” even though none exists in that region. We treat these detections as noise and filter them out. A characteristic of these errors is that they occur only in short spikes lasting a few tens to a few hundred milliseconds.
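Because the false detections are short-lived, one simple way to filter them is to accept a square only once it has appeared near the same position for several consecutive frames. The sketch below shows this idea; it is one possible filter rather than the one used in our code, and `DetectionFilter`, `min_frames` and `max_shift` are hypothetical names and values that would need tuning against the camera frame rate.

```python
def center(rect):
    """Centre point of an (x, y, w, h) rectangle."""
    x, y, w, h = rect
    return (x + w / 2.0, y + h / 2.0)


class DetectionFilter(object):
    """Pass a detection through only after it persists for min_frames frames."""

    def __init__(self, min_frames=5, max_shift=40.0):
        self.min_frames = min_frames  # frames a square must survive
        self.max_shift = max_shift    # max centre movement (pixels) per frame
        self.tracks = []              # (rect, consecutive frame count) pairs

    def update(self, rects):
        """Feed the rectangles of one frame and return the stable ones."""
        new_tracks = []
        for rect in rects:
            cx, cy = center(rect)
            count = 1
            for old_rect, old_count in self.tracks:
                ox, oy = center(old_rect)
                near = (abs(cx - ox) <= self.max_shift and
                        abs(cy - oy) <= self.max_shift)
                if near:
                    count = max(count, old_count + 1)
            new_tracks.append((rect, count))
        self.tracks = new_tracks
        return [rect for rect, count in new_tracks if count >= self.min_frames]


# Illustrative frames: a steady face plus a square that flickers in and out.
face = (100, 100, 50, 50)
noise = (400, 300, 40, 40)
flt = DetectionFilter(min_frames=3)
results = [flt.update(frame) for frame in
           [[face, noise], [face], [face], [face, noise]]]
```

The trade-off is added delay: a real face is only reported after `min_frames` frames, so the value should be just long enough to outlast the spikes.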

As can be seen in the images above, a line is drawn from the centre of the screen to the centre of each detected face. This line is treated as a vector and is sent to the velocity control script in ROS, where it is used to control the gimbal and to rotate and move the drone in the correct direction.
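The vector itself is simple to compute from the face rectangle. The following sketch shows one way to do it; the function names and the normalisation to the range -1 to 1 are assumptions for illustration, and the step of publishing the result to ROS is left out.

```python
def face_offset(frame_w, frame_h, face):
    """Pixel vector from the frame centre to the centre of an (x, y, w, h) face."""
    x, y, w, h = face
    face_cx = x + w / 2.0
    face_cy = y + h / 2.0
    return (face_cx - frame_w / 2.0, face_cy - frame_h / 2.0)


def normalized_offset(frame_w, frame_h, face):
    """Same vector scaled so that each component lies between -1 and 1."""
    dx, dy = face_offset(frame_w, frame_h, face)
    return (dx / (frame_w / 2.0), dy / (frame_h / 2.0))


# Illustrative 640x480 frame with a face to the upper right of the centre.
dx, dy = face_offset(640, 480, (400, 100, 80, 80))
nx, ny = normalized_offset(640, 480, (400, 100, 80, 80))
```

Image coordinates grow downwards, so a negative vertical component means the face is above the centre of the frame. Normalising the vector makes the control input independent of the camera resolution.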

Note that the response is not instantaneous: processing the image and computing the directional vectors for the drone takes time, so there is some latency in the drone's decision making. The drone therefore needs to travel at a relatively slow speed, both to respect its own safety radius and to prevent erroneous readings from being evaluated and used in the velocity control algorithm.
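As a rough illustration of how latency limits speed, assume the drone should travel no further than its safety radius during one processing delay. This rule and the numbers below are assumptions for the sake of the example, not measured values from our system.

```python
def max_safe_speed(safety_radius_m, latency_s):
    """Speed (m/s) at which the drone covers safety_radius_m in one delay."""
    if latency_s <= 0:
        raise ValueError("latency must be positive")
    return safety_radius_m / latency_s


# Hypothetical values: 1 m safety radius and 0.25 s processing latency.
speed_limit = max_safe_speed(1.0, 0.25)
```

With these example values the limit is 4 m/s. Doubling the latency halves the permitted speed, which is why slower image processing forces slower flight.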

Face counting is straightforward: we return the total number of squares generated within an image, which corresponds to the number of faces detected in that image.
